OpenAI Launches o3 and o4-mini: Redefining the Future of AI Reasoning

The Dawn of a New AI Era: Understanding OpenAI's Latest Innovation

The AI world is abuzz with OpenAI's latest announcement—two new reasoning models that promise to redefine what's possible in artificial intelligence. On April 16th, 2025, OpenAI unveiled its o3 and o4-mini models, claiming they represent the most significant advancement in reasoning capabilities since GPT-4. These models arrive at a pivotal moment in AI development, as industries from healthcare to finance eagerly seek more sophisticated tools for complex problem-solving and decision-making.

But here's the pivotal question: Are these models truly another cornerstone in OpenAI's march toward AGI? Or are they merely transitional upgrades—temporary boosts to ChatGPT's commercial viability before being phased out? As industries hold their breath, the real impact remains to be seen: Will this dual release accelerate AI democratization, or inadvertently widen the gap between those with access to cutting-edge tools and those left behind?

In this comprehensive analysis, we aim to demystify what makes o3 and o4-mini truly different from their predecessors. We'll explore their technical foundations, examine their milestone significance for the AI industry, and most importantly, investigate how these powerful new reasoning models might transform various sectors—from programming and finance to education and scientific research.

Revolutionary Rather Than Evolutionary: OpenAI's Fundamental Breakthrough

The Reveal and Initial Claims

On April 16th, 2025, OpenAI officially unveiled its highly anticipated o3 and o4-mini models on its website. These reasoning models represent a significant advancement in AI technology, with OpenAI boldly claiming they are "the most intelligent and powerful reasoning models to date." What makes o3 particularly noteworthy is its full tool access, which includes web search, Python data analysis, visual reasoning, and image generation, essentially integrating multiple AI functions into a single, comprehensive system.

During the official YouTube livestream presentation, OpenAI demonstrated how the new multimodal models interact with various content types, emphasizing that o3 stands as its state-of-the-art reasoning model, while o4-mini is designed for scenarios requiring fast and cost-effective reasoning. This dual release appears intended to address different market segments with varying needs for processing power versus efficiency.

Executive Statements and Integration Announcements

The buildup to this release was carefully orchestrated, with OpenAI CEO Sam Altman dropping hints on Twitter about the new generation of reasoning models. Interestingly, OpenAI skipped the "o2" designation entirely, moving directly from the previous o1 model to o3. The gap was reportedly chosen to avoid a trademark conflict with the telecom brand O2 rather than to signal a leap in capabilities.

In parallel with OpenAI's announcement, Microsoft revealed on its official Azure blog that both o3 and o4-mini have already been integrated into the Azure OpenAI service. This rapid integration highlights the strategic importance of these models to Microsoft's AI offerings, with specific mentions of their ability to support multi-API and multi-tool parallel calls, a significant enhancement for enterprise-level agent workflows and automated task management.

Technical Foundations: How ChatGPT o3 and o4-mini Work

Architectural Principles and Training Methodology

At their core, o3 and o4-mini belong to OpenAI's "o series" of reasoning models. What distinguishes these models from their predecessors is their training methodology, which emphasizes extended "thinking time." To understand this concept, imagine giving a human more time to contemplate a complex problem rather than demanding an immediate answer—the o series models are trained to simulate this deeper cognitive process, resulting in improved reasoning capabilities for multi-step complex tasks.

These reasoning models are fundamentally multimodal, meaning they can process and integrate various input types simultaneously (text, images, code, and more) to formulate cohesive responses. Their ability to "think with images" represents a significant step beyond earlier models that were primarily text-focused. Think of it as the difference between trying to solve a problem with just written descriptions versus having access to diagrams, photographs, and executable code all at once.

Differentiating Features: o3 vs. o4-mini

While both models share a common architectural philosophy, they serve different purposes within OpenAI's ecosystem:

The o3 model stands as OpenAI's most powerful reasoning system, excelling particularly in coding, mathematics, scientific reasoning, and visual tasks. One of its most notable abilities is generating and critically evaluating new hypotheses, similar to how a scientist might propose and then test a theory. According to OpenAI, o3 makes 20% fewer major errors than o1 on difficult, real-world tasks, making it one of the most reliable AI coding assistants available.

In contrast, o4-mini is a smaller, more cost-efficient model that has been specifically optimized for mathematics, coding, and visual task performance. Its design prioritizes higher usage volume and is ideally suited for high-throughput scenarios where many requests need processing in rapid succession. That makes o4-mini the practical workhorse compared to o3's more comprehensive but resource-intensive approach.

Performance Optimization and Benchmarking: Quantifiable Leaps in AI Capability

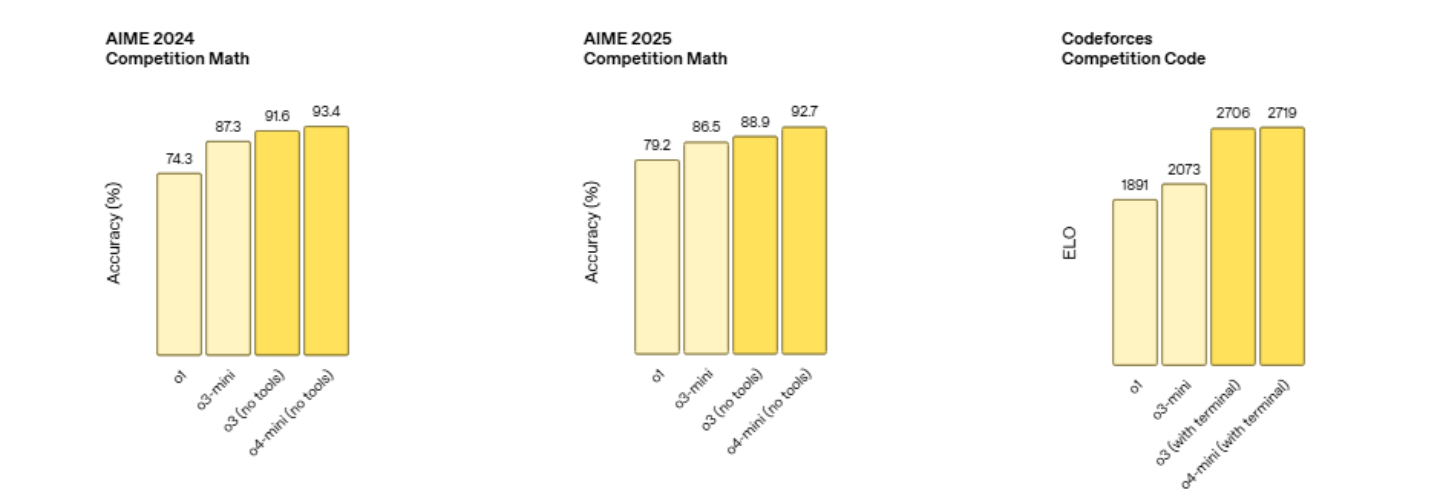

Both models have demonstrated remarkable performance improvements across rigorous objective benchmarks. In the prestigious AIME 2024 Competition Math benchmark, o3 with Python tools reached an impressive 98.7% accuracy compared to o1's 74.3%—representing a dramatic leap in mathematical problem-solving capabilities.

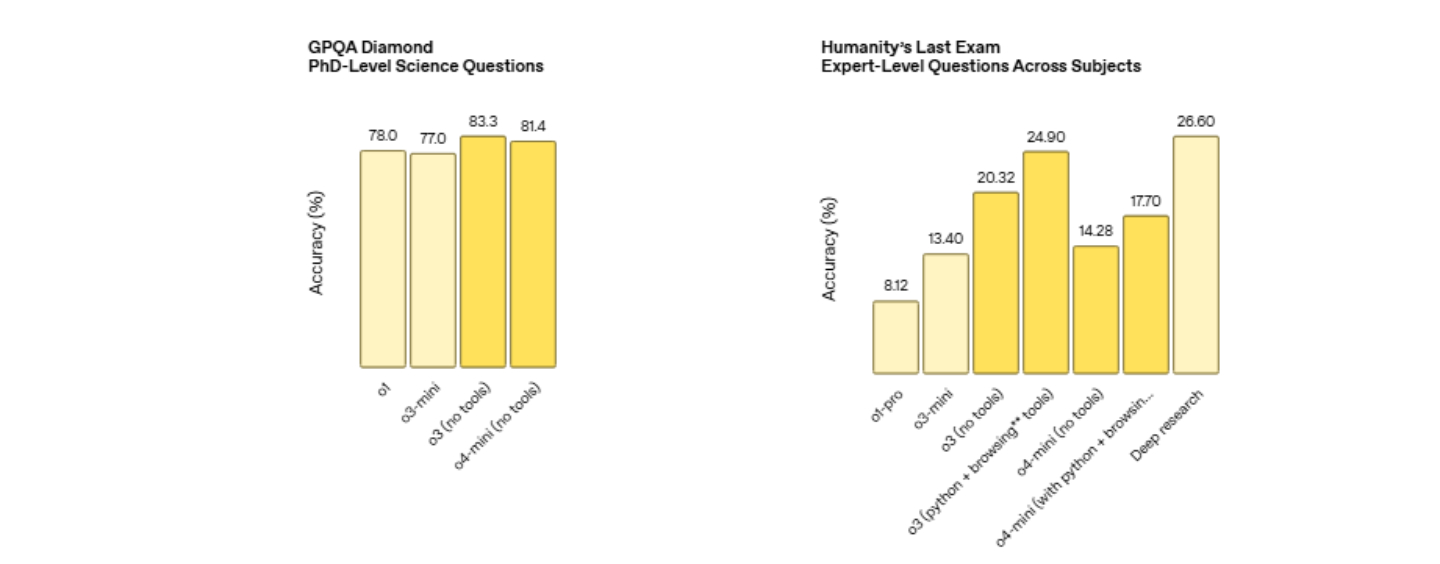

For Codeforces Competition, o4-mini with terminal access achieved a remarkable 2719 Elo rating, far exceeding o1's 1891 rating. Even in PhD-level scientific reasoning measured by the GPQA Diamond benchmark, o3 without tools reached 83.3% accuracy compared to o1's 78%.
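To put the rating gap in perspective, the classical Elo expected-score formula can be applied to the two ratings quoted above. This is only a rough sketch: it assumes Codeforces ratings behave like classical Elo ratings on a 400-point scale, which is an approximation, and `expected_score` is a helper name introduced here for illustration.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Classical Elo expected score of player A against player B."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# Ratings quoted in the text above.
O4_MINI_RATING = 2719
O1_RATING = 1891

gap = O4_MINI_RATING - O1_RATING
p = expected_score(O4_MINI_RATING, O1_RATING)

print(f"Rating gap: {gap} points")
print(f"Expected score for the higher-rated side: {p:.3f}")
```

Under that assumption, an 828-point gap corresponds to an expected score of roughly 0.99 for the higher-rated side in a head-to-head comparison.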

What's particularly noteworthy is o4-mini's stellar performance despite its smaller size—achieving 93.4% accuracy on AIME 2024 and maintaining a robust 81.4% accuracy on PhD-level scientific questions. This suggests OpenAI has made significant strides in optimizing smaller models for specific applications without sacrificing performance.

Furthermore, o3 equipped with Python and browsing tools achieved 24.9% accuracy on Humanity's Last Exam, nearly matching OpenAI's Deep Research agent while running significantly faster. This performance is particularly notable given that the benchmark consists of cross-disciplinary, expert-level questions, and it demonstrates the model's strength in handling extremely complex scenarios.

It's evident that o3 and o4-mini have made tremendous advancements in solving difficult real-world tasks. They not only reduce error rates through rigorous analysis and improve mathematical reasoning and programming capabilities, but they also excel beyond just STEM fields. In handling non-technical tasks (such as business and creative work) and data science applications, they outperform the previous generation o3-mini with greater effectiveness and efficiency. This comprehensive capability enhancement signals that AI reasoning abilities are progressing toward greater generalization and human expert-level performance.

Competitive Analysis and Comparative Performance

Performance Metrics

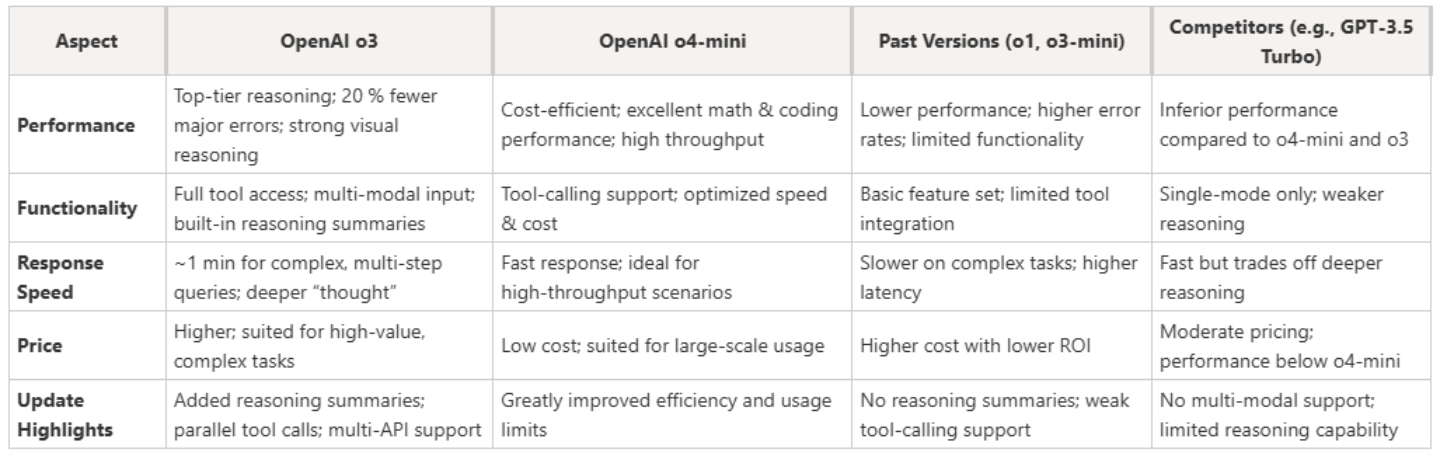

OpenAI claims that o3 and o4-mini represent significant upgrades from o1 and o3-mini, with substantial improvements in both performance and efficiency. While o3 provides the most advanced capabilities, o4-mini offers a more cost-effective solution for scenarios requiring high-frequency API calls.

It's worth noting that older OpenAI models such as GPT-3.5 Turbo fall short of even o4-mini in both performance and multimodal capabilities. Additionally, according to reports, these models were released ahead of GPT-5, whose launch was pushed back, which suggests OpenAI's confidence in their standalone capabilities.

Real-World Applications Across Industries

Having examined the impressive benchmark performance and technical capabilities of o3 and o4-mini, a critical question emerges: How do these performance breakthroughs translate into tangible benefits for various industries? While benchmarks demonstrate theoretical capabilities, the true measure of these AI reasoning models lies in their practical implementation across diverse sectors. The following sections explore how organizations are already leveraging these advanced models to transform their operations, solve previously intractable problems, and create new possibilities that were unimaginable with previous generations of AI technology.

1. Financial Sector Implementation

In the financial industry, the o3 model is already being utilized for automating risk assessment and predictive modeling. Its ability to analyze vast amounts of historical financial data allows it to identify trends and predict market movements, thereby supporting real-time decision-making processes. This represents a significant advancement over previous-generation AI systems that often required human interpretation of their outputs before application to financial decisions.

To illustrate this practical application: imagine a financial institution needing to evaluate the creditworthiness of thousands of loan applicants daily. The OpenAI o3 model can simultaneously analyze traditional metrics (income, debt ratios) alongside unconventional data points (payment histories, spending patterns), while contextualizing these within broader economic trends—all within a time frame that allows for nearly immediate decision-making.

2. Programming and Technical Support Solutions

The o3 model has demonstrated exceptional performance on the programming competition platform Codeforces, helping developers solve complex programming problems with improved code quality and efficiency. This capability extends beyond simple code generation to include sophisticated problem-solving approaches that might otherwise require experienced human developers, making it an indispensable AI coding assistant.

For instance, rather than merely suggesting code snippets, the o3 model can analyze a complex programming challenge, decompose it into logical components, propose multiple solution strategies, evaluate their respective efficiency, and finally implement the optimal approach—all while providing explanatory comments that help developers understand the reasoning behind each decision.

3. Scientific Research and Educational Applications

Both AI reasoning models have shown impressive performance in mathematics and scientific examinations, supporting complex scientific problem-solving, hypothesis validation, and educational applications. Their ability to reason through multi-step problems makes them valuable tools for both researchers and educators.

In an educational context, these models could provide students with personalized guidance through complex scientific concepts, adapting explanations based on the student's demonstrated understanding and learning pace. For researchers, the models can help validate hypotheses by identifying potential logical flaws or suggesting alternative interpretations of experimental data.

4. Enterprise Automation Integration

Microsoft's Azure has integrated both o3 and o4-mini to support businesses in building multi-agent automated workflows. This integration enables parallel tool calling and automatic execution of complex tasks, potentially transforming how enterprises approach process automation and automated task management.

Consider a customer service application: rather than having separate AI systems for initial query classification, information retrieval, and response generation, an o3-powered workflow could simultaneously access customer history, query product databases, check shipping status, and generate a comprehensive response—all within a single process flow that mimics how a human customer service representative would handle the inquiry.
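The parallel lookups in this customer service scenario can be sketched with Python's `asyncio`. This is a minimal illustration of the concurrency pattern only, not how Azure or OpenAI implement parallel tool calls; the three lookup functions, their arguments, and their return values are hypothetical stubs that simulate I/O.

```python
import asyncio


# Hypothetical stand-ins for real back-end lookups; each just simulates I/O.
async def fetch_customer_history(customer_id: str) -> dict:
    await asyncio.sleep(0.01)
    return {"customer_id": customer_id, "open_tickets": 1}


async def query_product_db(sku: str) -> dict:
    await asyncio.sleep(0.01)
    return {"sku": sku, "in_stock": True}


async def check_shipping_status(order_id: str) -> dict:
    await asyncio.sleep(0.01)
    return {"order_id": order_id, "status": "in transit"}


async def gather_context(customer_id: str, sku: str, order_id: str) -> dict:
    """Run the three lookups concurrently and merge them into one context."""
    history, product, shipping = await asyncio.gather(
        fetch_customer_history(customer_id),
        query_product_db(sku),
        check_shipping_status(order_id),
    )
    return {"history": history, "product": product, "shipping": shipping}


context = asyncio.run(gather_context("c-42", "sku-7", "o-1001"))
print(context)
```

In a real workflow, the merged context would be passed back to the model so it can write the final response in one step.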

5. Visual Content Analysis and 3D Model Generation

Both multimodal AI models demonstrate advanced capabilities in analyzing images, charts, and visual data, generating comprehensive textual interpretations that can be applied to data analysis and creative design processes. This represents a significant step forward in bridging the gap between visual and textual information processing.

For example, when presented with a complex data visualization, these models can not only describe what they "see" but can also interpret trends, identify anomalies, suggest alternative visualization approaches, and even generate code to reproduce or improve the visualization, effectively serving as both analyst and visual communication advisor.

Additionally, their 3D model generation capabilities allow for creating detailed three-dimensional representations from textual descriptions or 2D references, opening new possibilities for the design, architecture, and entertainment industries.

FAQs About OpenAI o3 and o4-mini

Q: What is the main difference between OpenAI o3 and o4-mini?

A: o3 is OpenAI's latest large multimodal reasoning model, excelling at complex text and image tasks. o4-mini keeps the core multimodal capabilities in a smaller model optimized for inference speed and cost, making it better suited to high-volume and latency-sensitive applications. Both are hosted models accessed through ChatGPT or the API rather than deployed on-device.

Q: How can I access these models on the OpenAI platform?

A: In the OpenAI API, simply set the model parameter to either "o3" or "o4-mini". Like other models, you can access them through official SDKs (Python, Node.js, etc.) or by sending direct HTTP requests.

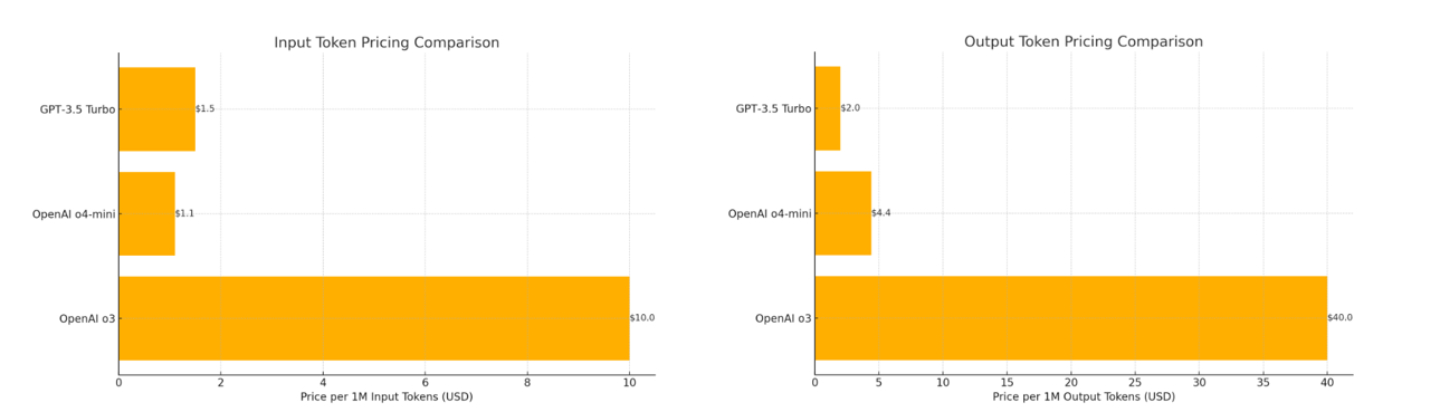
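As a minimal sketch of the direct HTTP route, the snippet below builds a request for the Chat Completions endpoint using only the standard library, without sending it. The prompt text, the `build_request` helper, and the placeholder key are assumptions made for illustration; check the official API reference for the current request format.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for the given model."""
    payload = {
        "model": model,  # "o3" or "o4-mini", as described above
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# The key is read from an environment variable; the fallback is a placeholder.
request = build_request(
    "o4-mini",
    "Summarize the Elo rating system.",
    os.environ.get("OPENAI_API_KEY", "sk-placeholder"),
)
body = json.loads(request.data)
print(request.get_method(), request.full_url)
print(body["model"])
# To actually send it (requires a valid key): urllib.request.urlopen(request)
```

Switching between the two models is then a one-word change to the `model` argument.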

Q: What are the pricing and rate limits for o3/o4-mini?

A: Both models use token-based pricing, with o3 priced noticeably higher per token than o4-mini. Specific prices can be found on the OpenAI pricing page. Rate limits, expressed as requests and tokens per minute, depend on your usage tier and organizational quota, which can be increased through the management dashboard.
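Token-based pricing reduces to simple arithmetic, sketched below. The prices and model names in `PLACEHOLDER_PRICES` are made-up values for illustration, not OpenAI's actual rates, and `estimate_cost` is a hypothetical helper.

```python
def estimate_cost(input_tokens: int, output_tokens: int,
                  input_price_per_million: float,
                  output_price_per_million: float) -> float:
    """Return the estimated cost in dollars for one request."""
    return (input_tokens * input_price_per_million
            + output_tokens * output_price_per_million) / 1_000_000


# Placeholder prices in dollars per million tokens: NOT real OpenAI prices.
PLACEHOLDER_PRICES = {
    "model-a": {"input": 10.0, "output": 40.0},
    "model-b": {"input": 1.0, "output": 4.0},
}

for name, price in PLACEHOLDER_PRICES.items():
    cost = estimate_cost(2_000, 500, price["input"], price["output"])
    print(f"{name}: ${cost:.4f} per request")
```

For reasoning models, the hidden reasoning tokens are billed as output tokens, so `output_tokens` should include them when estimating real costs.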

Q: How do these AI reasoning models perform for code generation and mathematical reasoning?

A: o3 demonstrates excellent performance on the latest programming benchmarks (such as HumanEval), capable of generating high-quality functions and algorithms. o4-mini, with its smaller parameter count, performs slightly less impressively but still satisfies most requirements for common scripts and templated code generation.

Q: Is it possible to fine-tune o3/o4-mini or use custom instructions?

A: Currently, both models support customization through prompt engineering and system messages to modify output style. While full fine-tuning interfaces haven't been released, developers can achieve a degree of behavioral customization through mechanisms like function calling and tool use.
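As a sketch of what function calling involves on the developer's side, the snippet below defines one tool and a local dispatcher for it. The dictionary follows the `tools` shape used by the Chat Completions API as best understood here; the tool name, its parameters, and the stubbed result are hypothetical.

```python
import json

# Hypothetical tool: the name, description, and parameters are illustrative.
get_order_status_tool = {
    "type": "function",
    "function": {
        "name": "get_order_status",
        "description": "Look up the shipping status of an order.",
        "parameters": {
            "type": "object",
            "properties": {
                "order_id": {"type": "string", "description": "Order identifier"},
            },
            "required": ["order_id"],
        },
    },
}


def dispatch(tool_name: str, arguments_json: str) -> str:
    """Route a model-requested tool call to local code and return a JSON result."""
    args = json.loads(arguments_json)
    if tool_name == "get_order_status":
        # Stubbed result; a real handler would query an order system.
        return json.dumps({"order_id": args["order_id"], "status": "shipped"})
    raise ValueError(f"Unknown tool: {tool_name}")


result = dispatch("get_order_status", '{"order_id": "o-1001"}')
print(result)
```

The model only proposes the call and its arguments; the application decides whether and how to execute it, which is where behavioral constraints can be enforced.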

Q: What are the approximate latency and throughput figures for these models?

A: Latency depends heavily on input length and on how long the model reasons before answering, so the following figures are rough guides only. o3 has a per-inference latency of approximately 300-500ms and throughput optimized for batch processing. o4-mini reduces latency to 100-200ms with higher throughput per instance, making it more suitable for latency-sensitive applications.

Q: How can I reduce the hallucination rate in these models?

A: You can enhance factual accuracy by providing more context in prompts and using few-shot examples. Sampling controls such as temperature=0 or logit_bias can help on models that accept them, but reasoning models may reject these parameters, so check the API reference first. Additionally, implementing post-processing confidence detection for critical outputs is recommended.
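One simple form of post-processing confidence detection is to ask the same question several times and measure how often the answers agree. The sketch below shows that idea under stated assumptions: the sample answers are invented, the 0.8 threshold is arbitrary, and `majority_with_confidence` is a hypothetical helper rather than part of any SDK.

```python
from collections import Counter


def majority_with_confidence(answers: list[str]) -> tuple[str, float]:
    """Return the most common answer and the fraction of samples that agree."""
    if not answers:
        raise ValueError("answers must not be empty")
    normalized = [a.strip().lower() for a in answers]
    answer, count = Counter(normalized).most_common(1)[0]
    return answer, count / len(normalized)


# Hypothetical outputs from asking the same question several times.
samples = ["Paris", "paris ", "Paris", "Lyon", "Paris"]
answer, confidence = majority_with_confidence(samples)
needs_review = confidence < 0.8  # threshold is an arbitrary illustration
print(answer, confidence, needs_review)
```

Low agreement does not prove an answer is wrong, but it is a cheap signal for routing critical outputs to human review.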

Q: What multimodal inputs does o4-mini support?

A: Currently, text and image inputs are supported, with potential future expansion to audio or video. Images can be supplied by URL or as Base64-encoded data attached to the appropriate fields in API requests.
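The Base64 step can be sketched with the standard library alone. The message layout below follows the `image_url` content-part shape used by the Chat Completions API as best understood here; the helper names and the placeholder bytes are assumptions for illustration.

```python
import base64


def image_to_data_url(image_bytes: bytes, mime_type: str = "image/png") -> str:
    """Encode raw image bytes as a Base64 data URL."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def build_image_message(prompt: str, image_bytes: bytes) -> dict:
    """Build a user message that pairs a text prompt with an inline image."""
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {"type": "image_url",
             "image_url": {"url": image_to_data_url(image_bytes)}},
        ],
    }


# Placeholder bytes: the 8-byte PNG signature, not a complete image.
fake_png = b"\x89PNG\r\n\x1a\n"
message = build_image_message("Describe this chart.", fake_png)
url = message["content"][1]["image_url"]["url"]
print(url)
```

In practice the bytes would come from reading a real image file in binary mode, with `mime_type` set to match its format.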

Q: What built-in mechanisms exist for safety and content moderation?

A: Both models incorporate content filters that automatically identify sensitive topics including violence, hate speech, and sexual content. Developers can integrate custom supervision rules and third-party moderation systems to ensure compliance of both upstream prompts and downstream responses.

Q: How do these models compare to other AI models released in 2025?

A: o3 and o4-mini offer a combination of reasoning capabilities, multimodal understanding, and tool integration that sets them apart from competitors. Compared with GPT-4, o3 shows significant improvements in several key performance metrics.

Q: Are there community plugins or open-source tools to accelerate development?

A: Several community SDKs (such as openai-sdk-x), VSCode extensions, and Jupyter Lab plugins are available on GitHub, enabling rapid experimentation, debugging, and deployment of o3/o4-mini in local environments.

Conclusion: OpenAI's o3 and o4-mini -- A Significant Step Forward with Remaining Questions

The introduction of OpenAI's o3 and o4-mini models represents a substantial advancement in AI reasoning capabilities. These multimodal AI models demonstrate impressive strengths across multiple dimensions that make them potentially transformative tools for businesses, developers, researchers, and educators.

Their comprehensive tool access, which integrates web search, Python data analysis, visual reasoning, and image generation, eliminates previous barriers between specialized AI systems. The significant performance improvements, including a 20% reduction in major errors for o3 and exceptional mathematical reasoning for o4-mini, push the boundaries of what's possible with current AI technology. The dual-model approach cleverly addresses different market needs: o3 serves as the premium, highest-capability option, while o4-mini offers a cost-effective alternative for high-volume applications.

However, the widespread adoption of these powerful reasoning models raises legitimate concerns that warrant careful consideration. As these systems demonstrate near-human performance in domains like financial analysis, programming, and scientific research, we may witness significant workforce disruptions. Jobs that primarily involve pattern recognition, data analysis, and predictive modeling could face substantial transformation or elimination. Additionally, there's the risk of organizational over-dependence—as systems become more capable, the knowledge and skills to critically evaluate their outputs might diminish, creating vulnerability should these systems fail or malfunction.

What's undeniable is that o3 and o4-mini represent a significant step in AI development, combining multiple previously separate capabilities into integrated systems with enhanced reasoning abilities. Their arrival signals a shift toward more thoughtful, reliable, and transparent AI systems that could expand the technology's application into domains previously considered too complex or high-stakes for automated reasoning. As new models continue to emerge in 2025, OpenAI's reasoning models are setting a new benchmark for the industry to follow.

Written by

VelvetRose

Soft as velvet, strong as thorns—I am a rose, one of a kind.
